Poké-Pi-Dex

About the Project

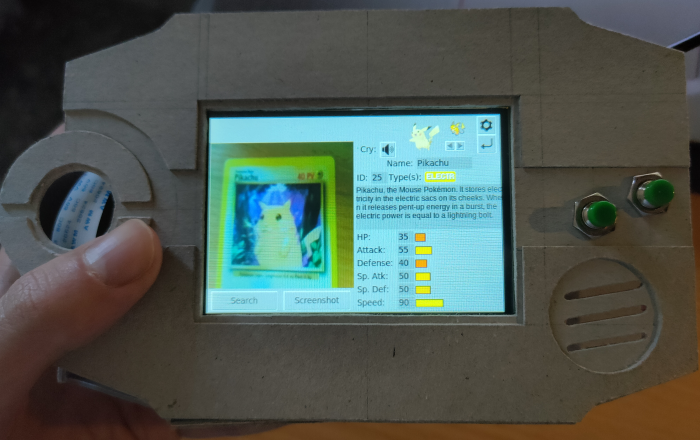

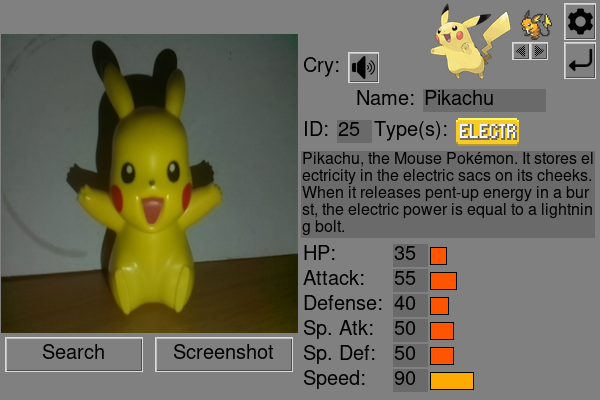

Poké-Pi-Dex is a device that emulates a Pokédex, capable of classifying first-generation Pokémon from a picture. It runs on a Raspberry Pi 4 with a PiCamera and additional components, all housed in a custom-made cardboard case 🌱.

TryKatChup and I developed this project as part of the Digital Systems M course at Alma Mater Studiorum, University of Bologna.

The Idea

In order to take the exam, students had to develop a project involving embedded programming or computer vision, write a report about it and give a presentation to the professors.

One time, while we were still brainstorming the topic of our project, we ordered McDonald’s and noticed that they were giving away Pokémon cards with each Happy Meal. We ended up with about five of them, and while we were eating, we came up with the idea of the Pokédex.

We both grew up with Pokémon and have played many different games from the franchise, so we thought a real, somewhat-functional Pokédex would be just too cool.

The Hoenn Pokédex in Generation VI

Development

The development of this project involved many different steps. The goal was to create a classifier capable of recognizing (i.e., labeling) a Pokémon from an image and providing information about it. In particular, the image could show a Pokémon in different forms, such as plush toys, dolls, action figures, cards, etc.

Dataset

When we started the project, there were already about 900 different Pokémon species. Building a classifier for such a large set of labels would have been simply impossible, given that we expected to run into some issues and not to find much data. Therefore, we opted for a smaller subset, limiting the scope to “just” the 151 first-generation Pokémon.

We started by searching for a labeled Pokémon image dataset, but we only found one, with about 7,000 images in total. We still considered ourselves lucky, but upon further investigation, we discovered that it was dogsh*t™: many pictures were mislabeled, and they didn’t share the same aspect ratio.

Since we had already made up our minds about this project, we decided it was worth trying to build our own dataset, and we ended up collecting about 12,000 images, which we carefully selected and resized to uniform dimensions.

If you know a thing or two about classifiers, I know what you’re probably thinking: “how the foongus did you guys expect to make a working classifier with 151 labels and just 12,000 images???”

BUT, since it was our first time with machine/deep learning, we didn’t exactly know yet, and at least we had fun trying.

Finding a dataset of Pokémon information, as expected, was much easier thanks to the huge community and fanbase: we found a wonderful GitHub repository (fanzeyi/pokemon.json) containing several JSON documents with information and sprites for the first 809 Pokémon. We extracted the first 151 entries, then extended and customized them a little.
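The extraction step can be illustrated with a minimal sketch that keeps only the first-generation entries. It assumes each entry is a dict with an integer `id` field (the national Pokédex number), as in that repository's `pokedex.json`. The function name `extract_gen1` and the sample entries are hypothetical.

```python
import json

GEN1_LAST_ID = 151  # Mew (#151) is the last first-generation Pokémon


def extract_gen1(entries):
    """Keep only entries whose Pokédex number is 1-151, sorted by id.

    Assumes each entry is a dict with an integer "id" field.
    """
    gen1 = [e for e in entries if 1 <= e["id"] <= GEN1_LAST_ID]
    return sorted(gen1, key=lambda e: e["id"])


if __name__ == "__main__":
    # Hypothetical sample mimicking a few entries of a pokedex-style JSON file
    sample = json.loads("""[
        {"id": 152, "name": {"english": "Chikorita"}, "type": ["Grass"]},
        {"id": 25, "name": {"english": "Pikachu"}, "type": ["Electric"]},
        {"id": 1, "name": {"english": "Bulbasaur"}, "type": ["Grass", "Poison"]}
    ]""")
    for entry in extract_gen1(sample):
        print(entry["id"], entry["name"]["english"])
```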

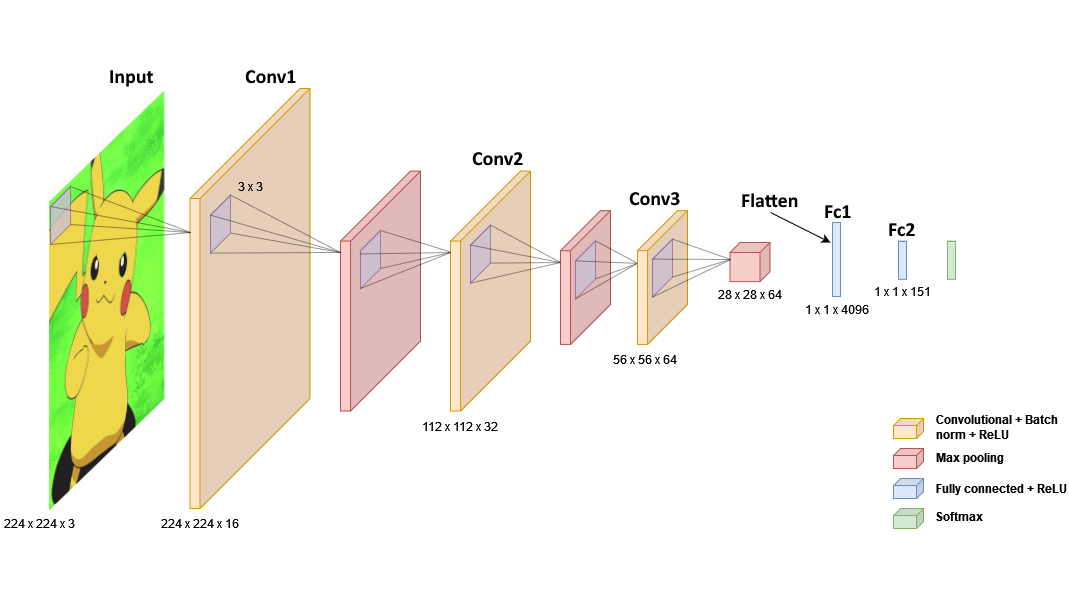

Classification Model

To implement the classifier, we adopted a Convolutional Neural Network (CNN), which we structured as shown in the following picture:

- Input layer: takes a 224x224 picture with 3 color channels (RGB).

- 3 Convolutional layers: with stride 1, an increasing number of filters (16, 32, 64) and a 3x3 kernel. These are used for feature extraction. After each convolution, the model applies:

  - Batch normalization;

  - ReLU;

  - Max pooling 2D.

- Flatten: reshapes the multi-dimensional feature maps into a one-dimensional vector.

- 2 Fully connected layers: apply linear transformations via a weight matrix.

- Softmax: final activation function that converts the score of each class into a probability.
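To make the layer list more concrete, the sketch below traces the feature-map size through the three convolution and pooling stages and computes the length of the flattened vector. The description above does not state the padding mode, so the sketch computes both Keras options: "valid" (the Keras default) and "same". It also assumes 2x2 max pooling with stride 2, which is the Keras `MaxPooling2D` default. The function names are hypothetical.

```python
def conv_output_size(size, kernel=3, stride=1, padding="valid"):
    """Spatial size after a 2D convolution (square inputs and kernels)."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1


def pool_output_size(size, pool=2, stride=2):
    """Spatial size after 2D max pooling with "valid" padding."""
    return (size - pool) // stride + 1


def flattened_length(input_size=224, filters=(16, 32, 64), padding="valid"):
    """Trace conv -> batch norm -> ReLU -> max-pool stages and return the Flatten length."""
    size = input_size
    for _ in filters:
        size = conv_output_size(size, padding=padding)
        # Batch normalization and ReLU do not change the shape
        size = pool_output_size(size)
    return size * size * filters[-1]


if __name__ == "__main__":
    print(flattened_length(padding="valid"))  # 26 * 26 * 64 = 43264
    print(flattened_length(padding="same"))   # 28 * 28 * 64 = 50176
```

The flattened vector feeds the first fully connected layer, so the padding choice directly affects how many weights that layer has.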

To train the model, we divided the dataset into:

- training set (80%), used to learn to recognize the Pokémon classes;

- validation set (10%), used for fine-tuning the hyperparameters;

- test set (10%), held out to evaluate the final model on unseen examples.
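An 80/10/10 split like this one can be sketched with a shuffled index permutation. This is a minimal sketch, assuming the images are addressed by index and a plain random shuffle is acceptable. The original text does not say how the split was performed, so the function name `split_indices` and the seed are hypothetical.

```python
import numpy as np


def split_indices(n_samples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle sample indices and split them into train/validation/test subsets.

    Whatever remains after the train and validation fractions goes to the test set.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    n_train = int(round(n_samples * train_frac))
    n_val = int(round(n_samples * val_frac))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_indices(12_000)
    print(len(train), len(val), len(test))  # 9600 1200 1200
```

With 151 classes and only about 80 images per class on average, a stratified split would additionally guarantee that every class appears in each subset. One way to do this is scikit-learn's `train_test_split` with `stratify=labels`.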

Training

To compile the model, we used the Adam (Adaptive Moment Estimation) optimizer. Adam is quite robust to the choice of hyperparameters and usually converges without much tuning.

As the loss function, we used SparseCategoricalCrossentropy from Keras, which expects integer class labels rather than one-hot vectors:

```python
model.compile(
    optimizer='adam',
    loss=tf.keras.losses.SparseCategoricalCrossentropy(),
    metrics=['accuracy']
)
```
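The last layer already applies softmax, so the loss is computed on probabilities (the Keras default, `from_logits=False`). The following lines reproduce in NumPy what this loss computes for integer labels. It is an illustrative re-implementation, not the Keras source, and the probabilities are made up.

```python
import numpy as np


def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean negative log-probability assigned to the true class.

    labels: integer class indices, shape (batch,)
    probs:  softmax outputs, shape (batch, num_classes)
    """
    probs = np.clip(probs, eps, 1.0 - eps)  # avoid log(0)
    true_class_probs = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(true_class_probs)))


if __name__ == "__main__":
    probs = np.array([[0.7, 0.2, 0.1],
                      [0.1, 0.8, 0.1]])
    labels = np.array([0, 1])  # integer labels, not one-hot vectors
    print(sparse_categorical_crossentropy(labels, probs))  # ≈ 0.2899
```

A confident, correct prediction (probability close to 1 for the true class) contributes a loss close to 0.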

We adopted early stopping to halt training once the validation loss stopped improving for 10 consecutive epochs, restoring the best weights seen so far:

```python
callback = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=10, restore_best_weights=True)
```
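The effect of `patience` and `restore_best_weights` can be illustrated with a simplified, pure-Python model of the callback's bookkeeping. This is not the Keras implementation: it assumes `min_delta=0` and ignores the callback's other options. The function name and loss values are hypothetical.

```python
def simulate_early_stopping(val_losses, patience=10):
    """Simplified model of EarlyStopping(monitor='val_loss', restore_best_weights=True).

    Returns (epochs_run, best_epoch), both 1-based. Assumes min_delta=0.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            # Improvement: these are the weights that would be restored
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch  # stop; weights from best_epoch are restored
    return len(val_losses), best_epoch


if __name__ == "__main__":
    losses = [1.0, 0.8, 0.9, 0.85, 0.95, 0.7]
    print(simulate_early_stopping(losses, patience=3))  # (5, 2)
```

In the example, the improvement at epoch 6 is never seen because training already stopped at epoch 5. A larger patience trades longer runs for a lower risk of stopping too early.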

Then, we trained the model for up to 100 epochs with a batch size of 64 (hence the division by 64 in the step counts):

```python
history = model.fit(
    train_dataset,
    epochs=100,
    callbacks=[callback],
    steps_per_epoch=train_len // 64,
    validation_data=val_dataset,
    validation_steps=val_len // 64
)
```
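The step counts passed to `fit` are simple integer divisions. The worked example below assumes exactly 12,000 images split 80/10/10 (the real sizes were only approximately these) and datasets batched with 64 samples per batch. `steps_for` is a hypothetical helper.

```python
BATCH_SIZE = 64


def steps_for(num_samples, batch_size=BATCH_SIZE):
    """Number of full batches, as computed by `num_samples // 64` in the fit call."""
    return num_samples // batch_size


if __name__ == "__main__":
    # Hypothetical set sizes: exactly 12,000 images split 80/10/10
    train_len, val_len = 9_600, 1_200
    train_steps = steps_for(train_len)
    val_steps = steps_for(val_len)
    print(train_steps, train_steps * BATCH_SIZE)  # 150 9600
    print(val_steps, val_steps * BATCH_SIZE)      # 18 1152
```

Under these assumptions, each validation pass would cover 1,152 of the 1,200 validation images, because the last partial batch is not included in `validation_steps`.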

The training was performed on an Nvidia GTX 1060 6GB GPU and took about 17 minutes.

Training Results

| Train Loss | Train Acc | Val Loss | Val Acc | Test Loss | Test Acc | Epochs |
|---|---|---|---|---|---|---|
| 0.0465 | 0.9901 | 0.0266 | 0.9929 | 0.0262 | 0.9933 | 72 |

Raspberry Pi

To implement the physical device, we needed a compact system capable of running a TensorFlow Lite interpreter, capturing pictures, and displaying the results on a screen. A Raspberry Pi was the perfect fit for our needs, and since we already had one, we decided to go with it.

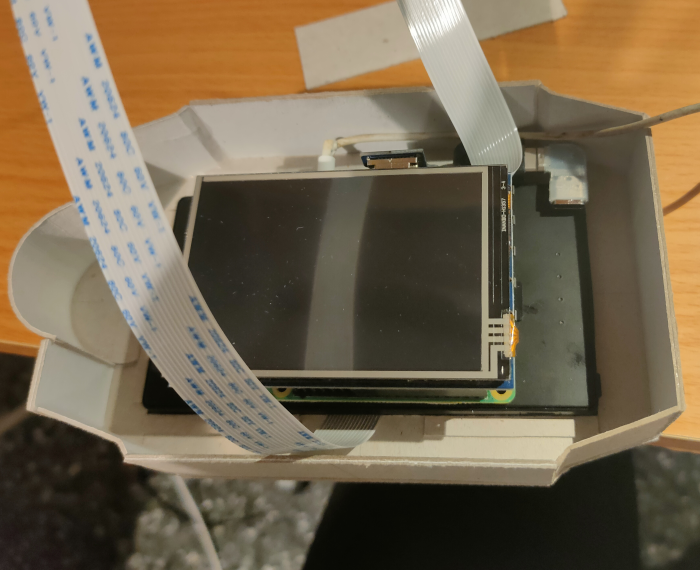

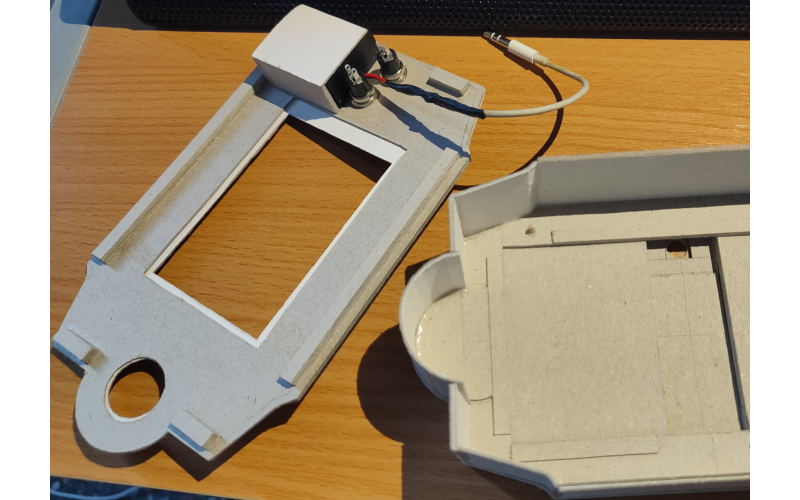

Hardware and Components

We already had a Raspberry Pi kit, which included:

- Raspberry Pi 4 Model B

- 32GB Class 10 microSD card

- power supply

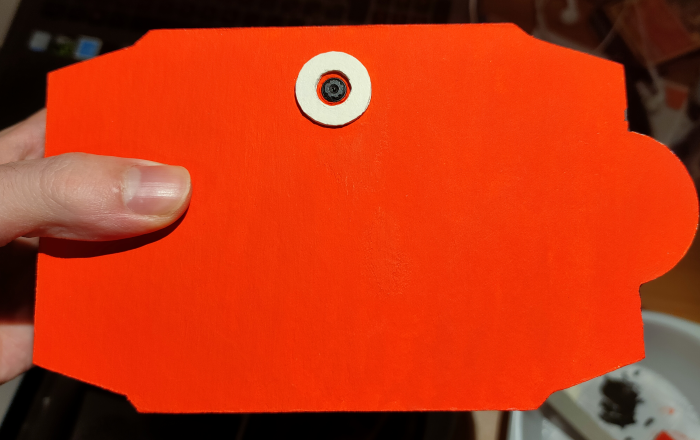

Additionally, we bought the following components:

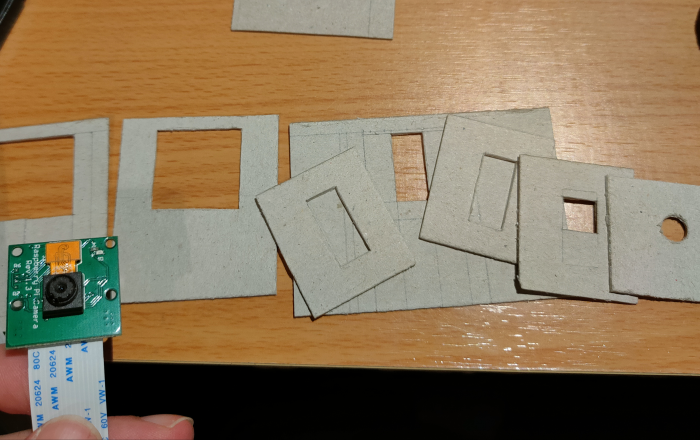

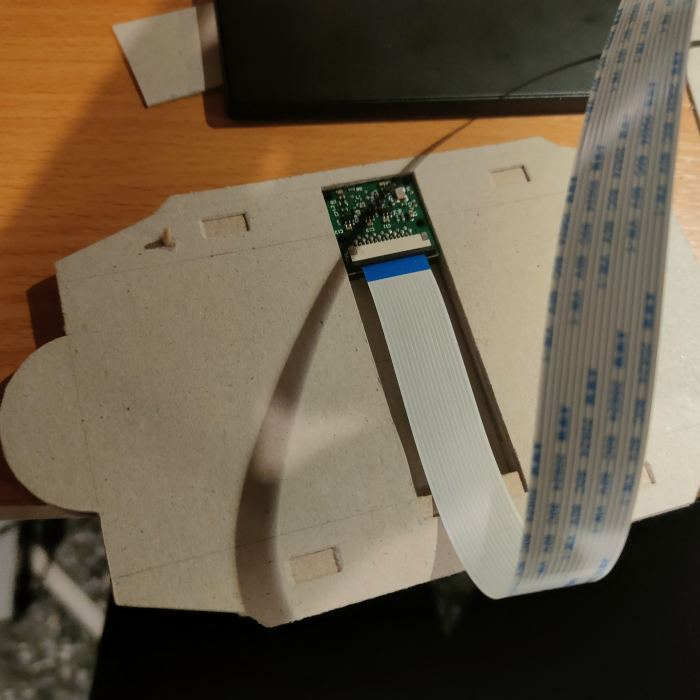

- PiCamera Rev 1.3 (5MP, 1080p)

- 3.5" HDMI LCD display with resistive touchscreen

- mini speakers

- push buttons

- ultra-thin powerbank

Operating System

For the operating system, we chose Raspberry Pi OS 32-bit, the official Linux distribution for Raspberry Pi, which includes the firmware and drivers needed to interact with peripherals. Furthermore, this OS supported TensorFlow Lite 2.4, which we needed to run our CNN.

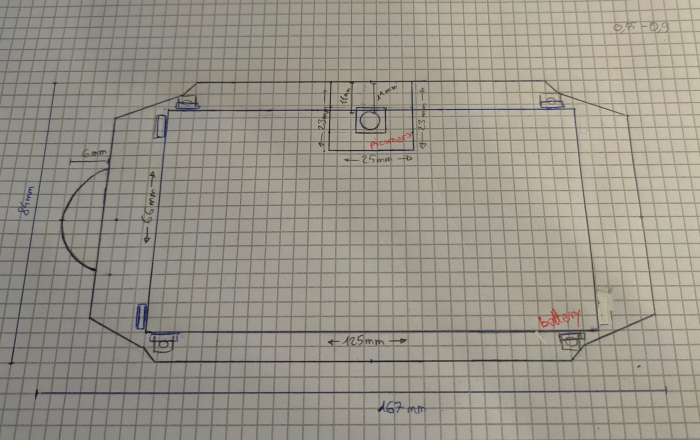

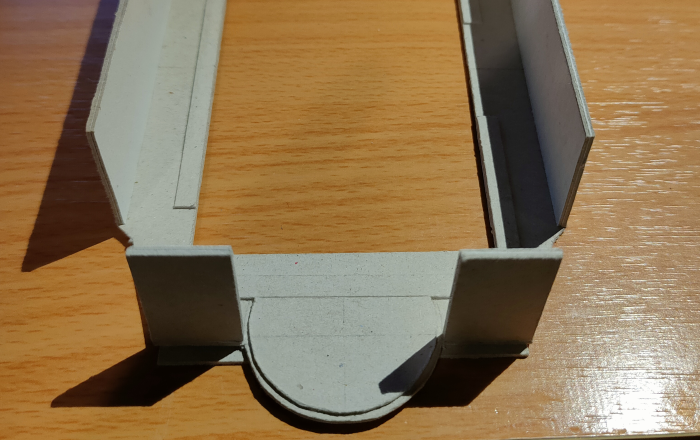

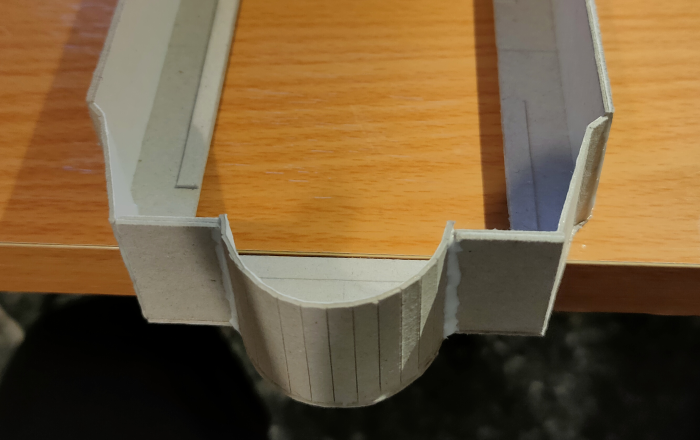

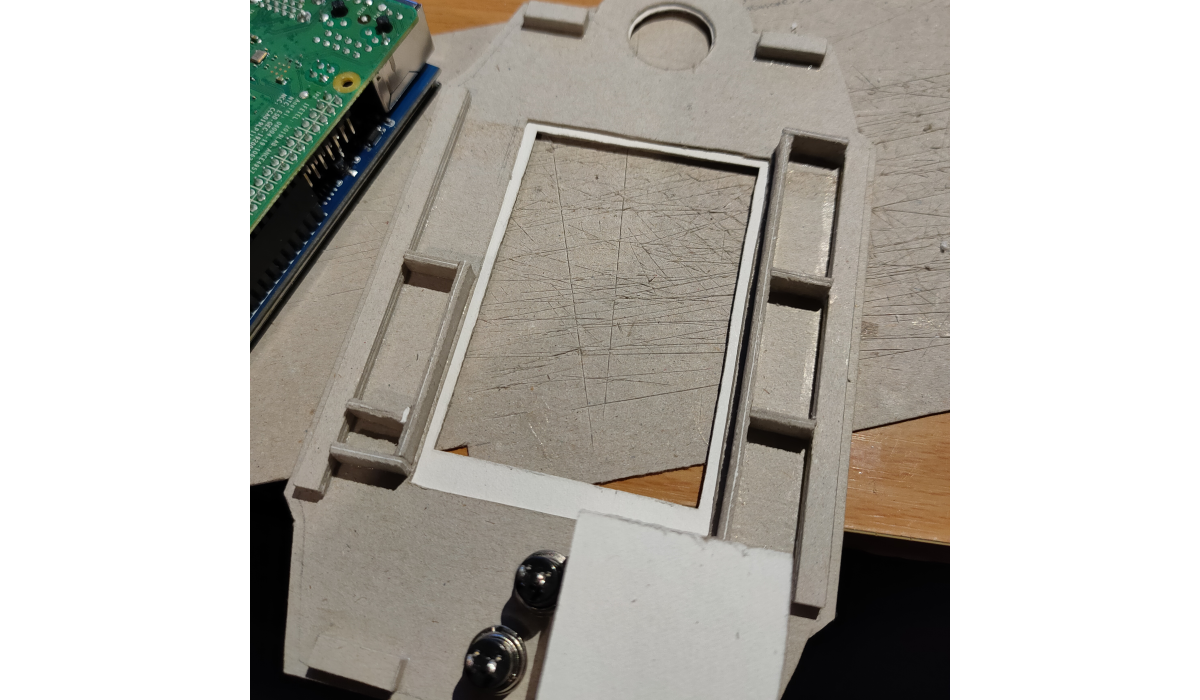

Cardboard Case

To create our Pokédex, we decided to make a cardboard case 🌱. We took inspiration from the Hoenn Pokédex in Generation VI, keeping in mind that it would need to house the Raspberry Pi and all of its components.

Python Application

Finally, we developed a fairly simple Python application that loads the trained model and runs the prediction. It takes a picture from the PiCamera video stream and feeds it to the model, which returns the most probable label via top-k (k-max) selection. Then, by querying a dictionary, the application displays the Pokémon’s information.
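The top-k selection followed by the dictionary lookup can be sketched with NumPy. In the sketch, the class names, probabilities and the `pokedex` dictionary are hypothetical stand-ins for the label encoder and the Pokémon JSON data. The application itself uses `tf.math.top_k` on the TFLite output, as shown in the classification code further down.

```python
import numpy as np


def top_k(probs, k=1):
    """Return the indices and scores of the k highest-probability classes, best first."""
    idx = np.argsort(probs)[::-1][:k]
    return idx, probs[idx]


if __name__ == "__main__":
    # Hypothetical class names (in label-encoder order) and softmax output
    class_names = np.array(["Bulbasaur", "Charmander", "Pikachu"])
    probs = np.array([0.05, 0.15, 0.80])
    # Hypothetical information dictionary keyed by Pokémon name
    pokedex = {"Pikachu": {"id": 25, "type": ["Electric"]}}

    idx, scores = top_k(probs, k=1)
    name = str(class_names[idx[0]])
    print(name, float(scores[0]), pokedex.get(name))  # Pikachu 0.8 {'id': 25, 'type': ['Electric']}
```

For k=1 this is equivalent to `np.argmax`. A larger k would also return the runner-up labels with their scores.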

Dependencies

The application was developed using Python 3.x and the following libraries/modules:

- Tkinter: a robust windowing toolkit used for creating the graphical user interface (GUI).

- TensorFlow: an end-to-end open-source machine learning platform, which was utilized to load and run the classifier.

- OpenCV: a comprehensive computer vision library, used to handle video input from the PiCamera.

Plus other libraries such as Pillow, Pygame, gpiozero, NumPy, scikit-learn, etc.

PiCamera Calibration

The PiCamera, like any device capable of capturing images, produces images with some distortion due to the lens. This phenomenon can be mitigated by calculating the distortion coefficients and the camera matrix.

OpenCV provides a tutorial and Python code for finding the intrinsic and extrinsic properties of a camera, as well as for undistorting images: this process is called Camera Calibration. We followed this tutorial and saved our parameters to a file.
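The camera matrix and distortion coefficients can be explained with a small model. The sketch below projects normalized image coordinates to pixel coordinates using the radial and tangential distortion model documented by OpenCV, with coefficients ordered k1, k2, p1, p2, k3. The intrinsic values are made up for illustration and are not our calibration results. The function name `distort_and_project` is hypothetical. `cv2.undistort` relies on this model to resample the image as if it had been taken by an ideal, distortion-free camera.

```python
import numpy as np


def distort_and_project(x, y, camera_matrix, dist_coefs):
    """Apply OpenCV's radial + tangential distortion model to normalized
    image coordinates (x, y) and map the result to pixel coordinates."""
    k1, k2, p1, p2, k3 = dist_coefs
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    fx, fy = camera_matrix[0, 0], camera_matrix[1, 1]
    cx, cy = camera_matrix[0, 2], camera_matrix[1, 2]
    return float(fx * x_d + cx), float(fy * y_d + cy)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 image (focal lengths and principal point)
    K = np.array([[500.0, 0.0, 320.0],
                  [0.0, 500.0, 240.0],
                  [0.0, 0.0, 1.0]])
    no_distortion = (0.0, 0.0, 0.0, 0.0, 0.0)
    barrel = (-0.3, 0.1, 0.0, 0.0, 0.0)  # negative k1: barrel distortion
    print(distort_and_project(0.4, 0.0, K, no_distortion))  # (520.0, 240.0)
    print(distort_and_project(0.4, 0.0, K, barrel))        # point pulled toward the center
```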

To correct the images, we use a function that applies these parameters to each image taken with the PiCamera.

```python
import cv2
import numpy as np


def rectify_image(img):
    # Load the parameters saved during camera calibration
    camera_matrix = np.load("resources/camera_matrix.npy")
    dist_coefs = np.load("resources/distortion_coefficients.npy")

    h, w = img.shape[:2]

    # Undistort the image
    new_camera_matrix, roi = cv2.getOptimalNewCameraMatrix(camera_matrix, dist_coefs, (w, h), 1, (w, h))
    dst = cv2.undistort(img, camera_matrix, dist_coefs, None, new_camera_matrix)

    # Crop to the valid region of interest and return the image
    x, y, w, h = roi
    dst = dst[y:y + h, x:x + w]
    return dst
```

Classification

The classification process follows these steps:

The application continuously captures frames from the PiCamera and displays them in the UI:

```python
def update(self):
    if self.update_video:
        ret, frame = self.video.get_frame()
        if ret:
            # Image.LANCZOS replaces the Image.ANTIALIAS alias, which was removed in Pillow 10
            self.photo = ImageTk.PhotoImage(image=Image.fromarray(frame).resize(image_size, Image.LANCZOS))
            self.canvas_video.create_image(res_width/4, res_width/4, image=self.photo, anchor=tk.CENTER)
    # Schedule the next refresh
    self.window.after(self.delay, self.update)
```

When the user selects “Search”, the application saves the current frame to disk and corrects it by applying the rectification parameters:

```python
def search(self):
    ret, frame = self.video.get_frame()
    if not ret:
        return  # no frame available from the camera
    if self.var_flip_image.get():
        frame = np.flip(frame, axis=1)  # mirror the frame horizontally
    cv2.imwrite("frame.jpg", cv2.cvtColor(frame, cv2.COLOR_RGB2BGR))
    frame = rectify_image(frame)
    cv2.imwrite("frame_undistorted.jpg", cv2.cvtColor(frame, cv2.COLOR_RGB2BGR))
    (pkmn, confidence) = pc.predict_top_n_pokemon("frame_undistorted.jpg", 1)
    pkmn = str(pkmn[0])                # best label
    confidence = float(confidence[0])  # its score
    self.load_pokemon(pkmn)
```

Then, the classifier runs the top-k (k-max) prediction on the rectified frame:

```python
def predict_top_n_pokemon(image_filename, num_top_pokemon):
    # Predicts num_top_pokemon from image_file, using a tflite model
    interpreter = tf.lite.Interpreter("resources/model.tflite")
    interpreter.allocate_tensors()

    # Get input and output tensors
    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # Load image and convert it to tensor
    if tf.__version__ == "2.6.0":
        # Open image with keras.utils
        from tensorflow.keras.utils import load_img, img_to_array
        img = load_img(image_filename, target_size=(224, 224))
        img = img_to_array(img, dtype=np.float32)
    else:
        # Open image with PIL.Image
        img = Image.open(image_filename)
        img = img.resize((224, 224), Image.LANCZOS)
        img = np.asarray(img, dtype=np.float32)
    img /= 255  # scale pixel values to [0, 1]
    img = np.expand_dims(img, axis=0)  # add the batch dimension
    input_tensor = np.array(img, dtype=np.float32)

    # Feed the input tensor and run the inference
    interpreter.set_tensor(input_details[0]['index'], input_tensor)
    interpreter.invoke()

    # Get output
    output_data = interpreter.get_tensor(output_details[0]['index'])

    # Get label encoder
    label_encoder = get_label_encoder()

    # Get best num_top_pokemon
    (top_k_scores, top_k_idx) = tf.math.top_k(output_data, num_top_pokemon)
    top_k_scores = np.squeeze(top_k_scores.numpy(), axis=0)
    top_k_idx = np.squeeze(top_k_idx.numpy(), axis=0)
    top_k_labels = label_encoder.inverse_transform(top_k_idx)
    return top_k_labels, top_k_scores
```

Finally, the application queries the data dictionary and displays the Pokémon’s information by populating the UI components:

```python
def load_pokemon(self, pkmn_id):
    try:
        self.loaded_pokemon = self.pokemon_repo.pokemon[pkmn_id]
    except KeyError:
        # Unknown label: leave the UI without a loaded Pokémon
        self.loaded_pokemon = None
        return
    self.load_image()
    self.load_name()
    self.load_id()
    self.load_types()
    self.load_description()
    self.load_stats()
    self.load_evolutions()
    self.load_cry()
    if self.settings.descr_voice:
        self.play_description()
```

Demo

Team Members

| Karina Chichifoi | Michele Righi |
|---|---|